Welcome to the Developer Update for September 11.

Today’s featured world is The Forgotten Bloom by Lilly the cat.

Announcements

2025.3.3 is in Open Beta!

You can find the full open beta notes here.

Here are some top-level highlights:

Instance Naming

VRC+ Subscribers can name their instance from within VRChat on the Open Beta! When creating an instance, you’ll have an option to name it, which changes how it appears to other users.

This could be useful for setting the tone, letting folks know what vibe you’re going for.

Two quick notes about this: First, we’re aware that this presents a moderation concern – so this also comes with a new way to report instances that are named inappropriately.

Second, we know that this is something you can do via the API from outside of VRChat. We’re not taking that functionality away! This is just an easy way for folks to name their instance from within VRChat.

OSC Endpoints for the Camera

We added OSC endpoints for the camera! All of the new endpoints have read/write access. There are a lot of them. Check out the full notes to see 'em all!

…and more!

…but that’s not all! There are a lot of QoL fixes and changes in this patch. Go read the full notes!

Selfie Expression – For Everyone!

Last week, we released a patch for VRChat that turned Selfie Expression on for ALL desktop users!

This means that, as of now, you no longer need VRC+ to use Selfie Expression.

Selfie Expression can track your facial expressions, head and eye movement, arms, hands, and fingers, just by using your webcam!

This system uses your camera along with your avatar’s visemes, allowing it to work with almost every avatar!

Selfie Expression requires the use of a camera and can be enabled in the Main Menu Settings, under Tracking & IK. Click “Enable Selfie Expression” to turn it on.

As a note, this feature is also available on Android – that’s been free since the feature’s inception!

For more information, we strongly suggest checking out the VRChat Wiki!

Avatar Marketplace Filters Are Coming!

One of the most requested features for the Avatar Marketplace has been some sort of way to filter avatars, making them easier to search. This has been something we’ve wanted to do from the jump, but we needed to do a few things before we were ready to ship it!

…but that time is coming, so we’re ready to share some stuff on how it’ll work.

Soon, we’ll be adding in a way to filter avatars on the Marketplace by style, performance, and platform. Users will also be able to search for avatars by name or tag, making it much easier to find avatars that are relevant to them.

Each avatar will be able to have up to 10 tags – which should help both folks selling avatars on the marketplace and buyers find each other!

Speaking of, if you are someone selling an avatar on the marketplace, make sure it’s tagged!

Marketplace Product Sorting

Do you sell stuff for your world? Are you part of the VRChat Creator Economy? Well, good news! You can easily order your products using the website:

September Downtime Postmortem

On September 10, you might’ve noticed we had a bit of downtime! A lot of times, when VRChat goes down, it’s not necessarily on us – a provider of ours might have an issue upstream, and it’s not something we can directly control.

This one, though, was on us. Here’s what happened and how we fixed it.

Summary

VRChat relies heavily on software called Valkey to provide the API with extremely high-performance caching and persistent application storage.

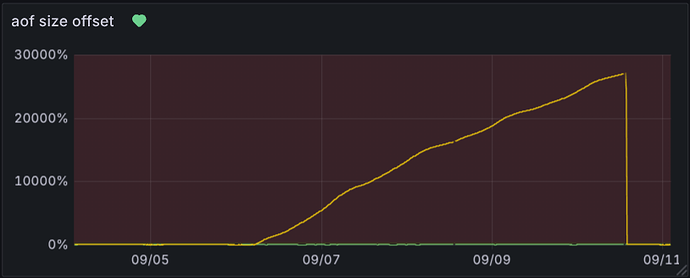

On September 6th, a background save task in one Valkey cache shard got stuck, causing Valkey’s append-only file (AOF) to slowly keep growing and eventually exhaust the local storage on September 10th.

When that happened, Valkey protected itself by blocking writes with a particular error: MISCONF Errors writing to the AOF file: No space left on device.

Due to the deep integration of Valkey into our API stack, write failures in Valkey turned into 500 Application Error responses for all our users, with some users also getting disconnected from real-time servers.

We detected the issue quickly via our monitoring alerts, but our usual metrics backend was unreachable due to a badly timed outage at our monitoring services hosting provider, which slowed down initial investigations.

So, what actually happened?

- Primary Cause: A Valkey cache shard experienced a stuck background save. Because the background save never progressed, Valkey never performed an AOF rewrite. The AOF kept growing until the storage filled, at which point all Valkey shards running on the same node began blocking writes with MISCONF to prevent data loss or data corruption.

- Compounding Factor: Our metrics backend was largely unavailable due to a poorly-timed service outage, slowing down diagnosis. Our logging system remained available and became our fallback source of truth.

- Recovery Friction: While we were able to relatively quickly identify and fix the problem, our API kept responding with the same 500 Application Error. Additionally, while trying to re-deploy the same build version of our API to clear those residual errors, our blue/green deployment failed multiple times due to intermittent API errors and network connectivity issues with the cloud provider we use for our own API, briefly leaving our load balancer in a misconfigured state that further prolonged the time to recovery.
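For anyone who wants to watch for the same failure mode on their own Valkey or Redis nodes, here is a minimal sketch in Python. It is not our internal tooling. It assumes you already have the text reply of the INFO persistence command from some client, and it relies on the standard rdb_bgsave_in_progress and rdb_current_bgsave_time_sec fields. The helper names, the one-hour threshold, and the sample values are all hypothetical and chosen for illustration only.

```python
def parse_info(text: str) -> dict[str, str]:
    """Parse the 'key:value' lines of an INFO reply, skipping '# Section' headers."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def bgsave_looks_stuck(fields: dict[str, str], max_seconds: int = 3600) -> bool:
    """Flag a background save that has been running longer than max_seconds.

    max_seconds is an assumed threshold; tune it to how long a normal
    save takes for your dataset.
    """
    in_progress = fields.get("rdb_bgsave_in_progress") == "1"
    elapsed = int(fields.get("rdb_current_bgsave_time_sec", "-1"))
    return in_progress and elapsed > max_seconds


# Illustrative reply only, not data captured during the incident.
SAMPLE_INFO = (
    "# Persistence\r\n"
    "rdb_bgsave_in_progress:1\r\n"
    "rdb_current_bgsave_time_sec:345600\r\n"
    "aof_enabled:1\r\n"
)

if bgsave_looks_stuck(parse_info(SAMPLE_INFO)):
    print("background save has been running suspiciously long")
```

A check like this only covers the stuck save itself. It says nothing about how much storage is left, so it complements a disk or AOF size alert and does not replace one.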

AOF size offset showing unbounded growth of the AOF log:

API error rate showing initial partial recovery at around 12:53 with full recovery at 13:30:

Why did the API keep erroring after Valkey recovered?

Once storage space headroom was added, Valkey unblocked writes, but our API kept responding with a 500 Application Error due to the same MISCONF error, even though Valkey wasn’t returning that error anymore.

A same-version API deployment forced clean connections and cleared those residual errors. We’re looking into what is causing previous errors to get stuck in our Valkey/Redis client library.

What was fixed during this incident?

- Expanded storage by about one-third to restore write headroom.

- Re-deployed the API to clear residual errors.

- Canceled the stuck background save so Valkey could rewrite its AOF and clear out used storage space.

What could we have done better?

- Communication: Investigation and recovery took priority over outward communication. We didn’t provide timely public or internal status updates. That’s on us.

- Observability Resilience: With our primary metrics backend impacted by a badly timed unrelated provider outage, it took longer to piece together the full picture.

- Deployment Safeguards: Our blue/green deployment path didn’t fail fast, roll back, or retry failed API requests.

Long-term fixes and follow-ups

What we’ve already shipped:

- Alerting: New alert rule to detect unbounded AOF growth early.
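To give a rough idea of what an "unbounded growth" check can look like, here is a small Python sketch. It is not the alert rule we shipped. It assumes you collect periodic (timestamp, AOF size in bytes) samples from somewhere, and it treats "the file never shrank over the window and grew by a lot" as the signal, since a healthy AOF drops in size whenever a rewrite completes. The function name and both thresholds are hypothetical.

```python
def aof_growing_unbounded(samples, min_samples=6, min_growth_bytes=1 << 30):
    """Return True if the AOF size never shrank across the window and grew a lot.

    samples: list of (timestamp_seconds, aof_size_bytes) tuples, oldest first.
    min_samples and min_growth_bytes are assumed values for illustration.
    """
    if len(samples) < min_samples:
        return False  # not enough data to judge
    sizes = [size for _, size in samples]
    # A completed AOF rewrite shows up as a drop between two samples.
    never_shrinks = all(later >= earlier for earlier, later in zip(sizes, sizes[1:]))
    return never_shrinks and sizes[-1] - sizes[0] >= min_growth_bytes


GIB = 1 << 30
# Made-up series: hourly samples growing by half a GiB each time.
stuck = [(hour * 3600, hour * (GIB // 2)) for hour in range(6)]
# Made-up series: the size drops once, as it would after a rewrite.
healthy = [(0, GIB), (3600, 2 * GIB), (7200, GIB // 10),
           (10800, GIB), (14400, 2 * GIB), (18000, 3 * GIB)]

print(aof_growing_unbounded(stuck))
print(aof_growing_unbounded(healthy))
```

The window length matters more than the exact byte threshold here: it needs to be longer than the normal gap between rewrites, or a healthy shard will trip the check.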

What we’re actively working on:

- Storage Telemetry for Valkey nodes: Ensure accurate total/free/used storage space reporting is captured either via Valkey or our host monitoring agents, and alert on abnormal storage space usage.

- Blue/green Swap Hardening: Automatically retry failed API operations when performing deployments with our provider.

- Upstream Collaboration: Engage with Valkey developers to identify conditions that led to the background save getting stuck and contribute fixes or mitigations where possible.
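The retry idea behind the blue/green hardening can be sketched like this in Python. This is a generic retry with exponential backoff and jitter, not our deployment code. TransientDeployError, call_with_retry, and every default value are hypothetical names and numbers picked for the example, and the operation you pass in stands in for whatever provider call a real deployment would make.

```python
import random
import time


class TransientDeployError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


def call_with_retry(operation, attempts=5, base_delay=1.0, max_delay=30.0,
                    sleep=time.sleep):
    """Call operation(), retrying on TransientDeployError with backoff.

    Re-raises the last error once all attempts are used, so the caller can
    fail fast or roll back instead of continuing in a half-swapped state.
    """
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientDeployError:
            if attempt == attempts:
                raise
            # Exponential backoff, capped, with jitter to avoid retry bursts.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))


# Usage sketch: an operation that fails twice, then succeeds.
state = {"calls": 0}

def flaky_swap():
    state["calls"] += 1
    if state["calls"] < 3:
        raise TransientDeployError("intermittent provider error")
    return "swapped"

print(call_with_retry(flaky_swap, sleep=lambda seconds: None))
```

Only errors that are known to be transient should be retried this way. Anything else should surface immediately so the deployment can stop before it touches the load balancer.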

When’s the next Jam?

We’re planning on announcing our Spookality plans NEXT WEEK. We’ll make an announcement on our major social media channels when this is ready.

Conclusion

…and that’s it for this Dev Update! See you next time, on September 25.